Written by: Arttu Huhtiniemi, DAIN Studios

Algorithmic decision-making is increasing rapidly across industries as well as in public services. By default, AI systems built on machine learning or deep learning models produce outputs with no explanation or context. As the predicted outcomes turn into recommendations, decisions or direct actions, humans tend to look for justification. Explainable AI (XAI) provides cues about how and why a decision was made, helping humans to understand and interact with the AI system.

In many use cases, such as autocorrecting text input, there is hardly any need for explanation. But when one’s life or socioeconomic status depends on the outcome, a blunt algorithmic statement with no rationale may feel threatening. Real use cases from cancer diagnostics to bank loan approvals require transparency and explainability in algorithmic decision-making.

The explanation should provide enough information for the user to either agree or disagree with the decision. There is currently no standard way of explaining machine learning models, and the right explanation depends on the use case and the type of data. For example, the user might want to know the following:

- What kind of data does the system learn from?

- How accurate is the output?

- How did the algorithm come to this decision or recommendation?

- Why weren’t other alternatives chosen?

- What are the consequences if the recommendation is used?

Simple as they may sound, these questions can be difficult to answer. What’s more, understanding how this information should be delivered to the user is far from obvious. Let’s look at two image-based applications that illustrate how XAI can work.

Case 1: Explaining emotion recognition with an XAI layer

At DAIN Studios, we developed an experimental computer vision application called Naama. Essentially, Naama combines face detection, face recognition and emotion classifiers but it also contains an XAI layer that visualizes the algorithm’s logic.

We trained the system to recognize consenting individuals by name and to classify their facial emotional states in real time into several categories, e.g. angry, happy, neutral, sad and surprised. The model presents the average confidence for each emotional state, while another layer shows Naama’s algorithm unpacked, highlighting the facial points that led to the allocation of emotional categories.

The grey layer is an example of an explainable AI solution and illustrates a way to communicate the significance of pixels to the user. The darker the pixel in this layer, the more significant that area is for detecting the person’s sentiment.
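One common way to estimate how much each image region matters is occlusion sensitivity: mask one patch at a time and record how far the model's score drops. The sketch below illustrates that general idea only; it is not necessarily the method used in Naama. The `occlusion_map` function and the `toy_score` stand-in classifier are hypothetical names introduced for illustration.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def occlusion_map(
    image: Image,
    score_fn: Callable[[Image], float],
    patch: int = 2,
    fill: float = 0.0,
) -> Image:
    """Return a per-pixel map of how much the score drops when that area is masked."""
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    importance = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            ys = range(top, min(top + patch, height))
            xs = range(left, min(left + patch, width))
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            for y in ys:
                for x in xs:
                    occluded[y][x] = fill
            # A large drop means the patch was important for the score.
            drop = baseline - score_fn(occluded)
            for y in ys:
                for x in xs:
                    importance[y][x] = drop
    return importance


def toy_score(image: Image) -> float:
    """Hypothetical stand-in for a classifier: mean brightness of the top-left 2x2 corner."""
    return sum(image[y][x] for y in range(2) for x in range(2)) / 4.0


image = [[1.0] * 4 for _ in range(4)]
heatmap = occlusion_map(image, toy_score)
```

With this toy scorer, only the top-left patch receives a non-zero importance. In a real system the values would be rendered as a layer over the image, for instance with darker pixels for higher importance.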

Case 2: Explaining medical diagnosis with reference images

An XAI implementation can aim to explain different properties of a model. Let’s say we have an image classification problem where each image belongs to one class. The goal is to use supervised learning to create a model that predicts the class labels of new, unseen data. In this case, we can focus on explaining the training data, the model’s overall behavior or a single prediction.

In supervised learning, the model first tweaks its parameters until it gets good results with pre-labeled data. To understand the model, we need to understand the training data as it has a big impact on the accuracy and reliability of the model predictions. The data must be of good quality, there must be enough of it and it must be representative.

Before deployment, it is critical to evaluate how the model is performing with new data. Typically, we will check the accuracy using test data, but additional analyses of model failures and biases are worth considering. The better we understand the model, the easier it is for the model developers to fix the problematic parts and improve the overall model.
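As a minimal sketch of such an analysis, the snippet below breaks test accuracy down by class and counts which classes get confused with each other. The labels and predictions are made-up illustrative values, not results from any real model, and the helper names are hypothetical.

```python
from collections import Counter


def per_class_accuracy(y_true, y_pred):
    """Fraction of correctly predicted samples for each true class."""
    total = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: correct[label] / total[label] for label in total}


def confusion_counts(y_true, y_pred):
    """Count how often each (true label, predicted label) pair occurs."""
    return Counter(zip(y_true, y_pred))


# Illustrative test labels and predictions (assumed, not real data).
y_true = ["CNV", "CNV", "DRUSEN", "DRUSEN", "NORMAL", "NORMAL"]
y_pred = ["CNV", "CNV", "CNV", "DRUSEN", "NORMAL", "NORMAL"]

overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
by_class = per_class_accuracy(y_true, y_pred)
confusions = confusion_counts(y_true, y_pred)
```

Here the overall accuracy looks acceptable, but the per-class view reveals that half of the DRUSEN samples are misclassified as CNV, which is the kind of failure pattern a single accuracy figure hides.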

One way to assess model performance, whether in the test phase or already in production use, is to look at single predictions. The system processes one image at a time, predicting its label and estimating the reliability of the classification. High-quality models mostly produce correct results, but even the best models fail at times. Users might be hesitant to trust the model if it provides no reasoning: providing explanations for each prediction makes it easier to trust the model and interact with it. For example, explanations let users check that the model is focusing on the correct parts of the image, as our cases demonstrate.
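A simple way to attach a reliability estimate to a single prediction is to convert the model's raw class scores into probabilities and look at the gap between the two best classes. The sketch below assumes the model outputs one raw score (logit) per class; the score values, the `min_margin` threshold and the function names are illustrative assumptions.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(score - peak) for label, score in logits.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


def summarize_prediction(logits, min_margin=0.2):
    """Return the top label, its probability and whether the result looks ambiguous."""
    probs = softmax(logits)
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    (top_label, top_prob), (_, second_prob) = ranked[0], ranked[1]
    margin = top_prob - second_prob
    return {
        "label": top_label,
        "confidence": top_prob,
        "margin": margin,
        "needs_review": margin < min_margin,  # small gap: flag for a human
    }


# Illustrative scores for one image (assumed values).
result = summarize_prediction({"CNV": 1.0, "DRUSEN": 1.1, "NORMAL": 0.9})
```

A small margin does not prove the prediction is wrong, and softmax probabilities are not guaranteed to be well calibrated, but the flag gives the user a cue that the case deserves a closer look.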

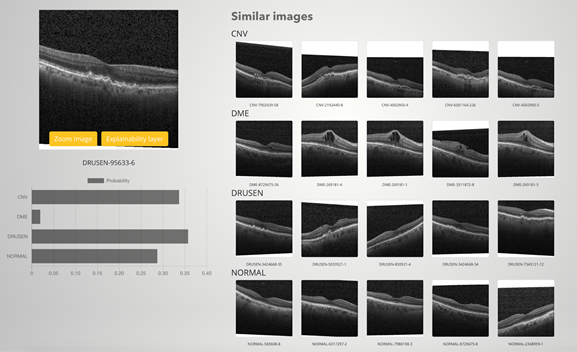

As an example of a single prediction, we have created an XAI demo system that matches an input image to similar images in the training set. A screenshot of this demo system in the medical imaging (Retinal OCT imaging) domain is shown below.

The query image can be seen on the top left; the image name below it shows that the actual class is DRUSEN. The model’s prediction is also shown on the left, and the classes CNV, DRUSEN and NORMAL get very similar scores. On the right, the five most similar images of each class are displayed. As the screenshot suggests, non-experts might have difficulty labeling the query image because there are so many similar images in different categories. The pixel explanation layer together with the similar images helps explain the result and assess its reliability.
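Retrieving reference images like this can be sketched as a nearest-neighbour search per class. The example below assumes every image has already been mapped to a feature vector (an embedding) and that cosine similarity is the comparison metric. Both are assumptions made for illustration, as are the tiny two-dimensional vectors and image identifiers; the demo system may compute similarity differently.

```python
import math
from collections import defaultdict


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def similar_per_class(query, training_set, k=5):
    """Return the ids of the k most similar training images for each class.

    training_set is a list of (image_id, label, embedding) tuples.
    """
    by_class = defaultdict(list)
    for image_id, label, embedding in training_set:
        by_class[label].append((cosine_similarity(query, embedding), image_id))
    return {
        label: [image_id for _, image_id in sorted(scored, reverse=True)[:k]]
        for label, scored in by_class.items()
    }


# Hypothetical embeddings; real ones would come from the trained model.
training_set = [
    ("cnv_1", "CNV", [1.0, 0.0]),
    ("cnv_2", "CNV", [0.0, 1.0]),
    ("drusen_1", "DRUSEN", [0.9, 0.1]),
    ("drusen_2", "DRUSEN", [0.1, 0.9]),
    ("normal_1", "NORMAL", [0.5, 0.5]),
]
matches = similar_per_class([1.0, 0.1], training_set, k=1)
```

Showing the closest examples from every class, rather than only from the predicted one, lets the user judge for themselves how separable the classes are around the query image.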

Is XAI ready for use?

What we have learnt from these cases is that explainability can bring AI solutions to another level by providing more valuable outputs that bridge the gap between humans and AI. The implementation of XAI methods, however, requires skillful engineering and data science know-how. In addition, it takes effort to really understand which additional pieces of information from XAI will benefit the use case.

It is worth keeping in mind that explainable AI is still mostly developed by researchers and forerunner companies. There is no plug-and-play method that fits every AI system, but rather a set of tools that address different explainability needs. Choosing and applying the right tools requires a great amount of expert knowledge. At best, the benefits can be huge, as models that end users did not trust may become useful.

At DAIN Studios, we are researching the topic, and we welcome you to read more about XAI on our webpage.