Written by: Akseli Seppälä, University of Turku

If you are even slightly familiar with ethical AI or AI governance, you have probably heard of principles such as transparency, explainability, fairness, non-maleficence, accountability and privacy. These principles are easy to agree with; the real question is how to translate them into meaningful action.

How do organizations implement and deploy AI in a socially responsible way?

Research on AI ethics has been mostly conceptual, with a strong emphasis on defining the principles and guidelines of ethical AI. The lack of practical insight has not gone unnoticed. Documents such as "Ethics Guidelines for Trustworthy AI" by the European Commission's High-Level Expert Group on AI and "Ethically Aligned Design" by the IEEE already provide good starting points.

If the scientific literature provides few or no empirical results, how do we know what works in practice?

To find out, we interviewed experts from 12 organizations that operate in Finland and routinely work with AI systems. Each organization either used AI systems internally or sold them as products, developed AI systems for clients, or provided AI-related services. Four of the organizations operated in the public sector and eight in the private sector. The informants held managerial positions ranging from CEOs to lead data scientists.

The interviews were the primary source of information for this research, but we also examined publicly available material on the organizations' AI policies, ethics guidelines and responsibility statements. We did this to understand the wider organizational context in which AI-related work takes place.

Ethical principles should affect the everyday use of AI

Ethical principles are not just something to display on the office wall and gaze upon every now and then. They should be an integral, built-in feature of the everyday use of AI systems. We wanted to know how the selected organizations, forerunners in their field, addressed ethical AI principles.

According to the EU's Ethics Guidelines for Trustworthy AI, the responsible use of AI systems can be supported by both technical and non-technical methods. Examples of technical safeguards include bias mitigation, continuous monitoring, and rigorous testing and validation of the system. Non-technical approaches include standardization and certification, impact and risk assessments, governance frameworks, education and awareness building, and diversity in AI design and development teams.

We asked the informants about the measures, mechanisms and practices their organizations had adopted to ensure the fair and ethical use of AI systems. We also asked who was accountable for the use of AI systems in their organization.

We identified four high-level categories of ethical AI practices

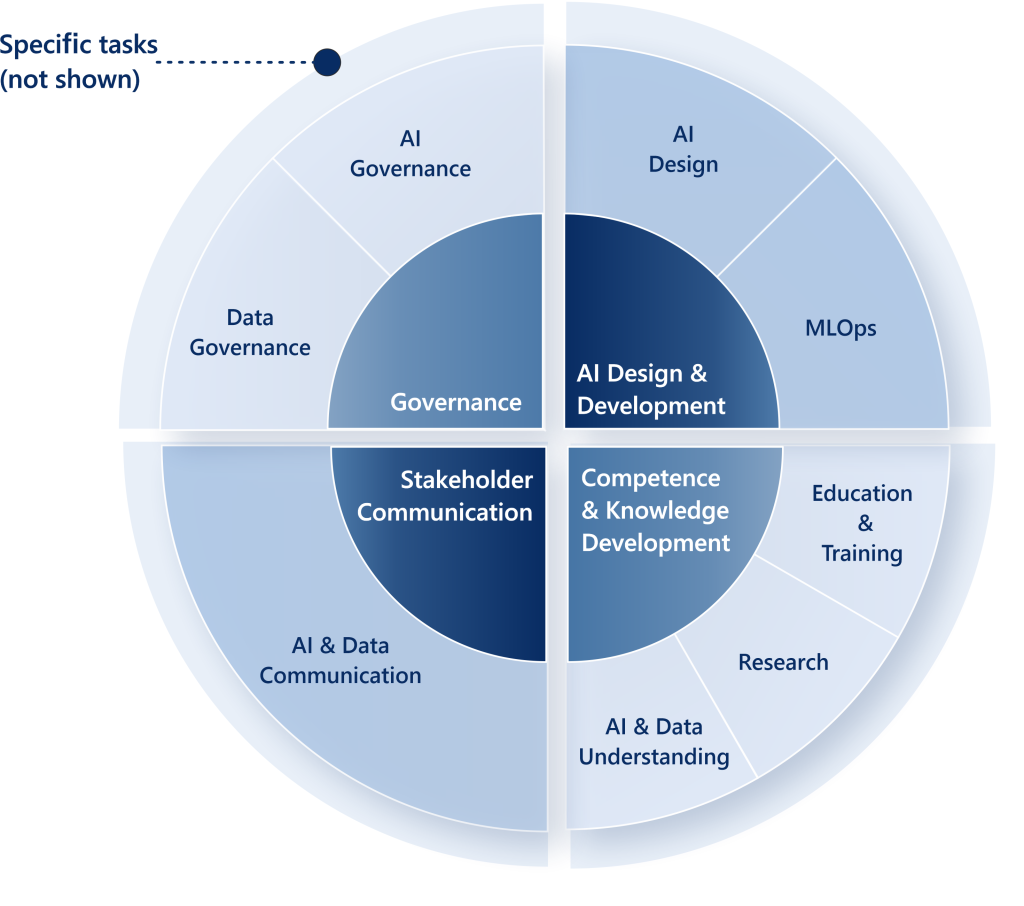

The 13 semi-structured interviews were transcribed, coded and analyzed following the Gioia method (see Gioia et al. 2013). This produced a list of practices that the organizations had adopted to implement ethical AI, which we grouped into four top-level categories:

- Governance refers to the set of administrative decisions and practices organizations use to address ethical concerns regarding the deployment, development, and use of AI systems. The governance practices consist of data governance and AI governance.

- AI design and development refers to the set of practical methods and practices organizations use when designing and developing AI systems. The AI design and development practices consist of AI design and MLOps.

- Competence and knowledge development refers to the set of practices used to promote the skills, know-how, and awareness required to implement ethical AI. The competence and knowledge development practices consist of education and training, research, and AI and data understanding.

- Stakeholder communication refers to the set of communication practices organizations use to inform stakeholders about their ethical AI practices, algorithms, or data. The stakeholder communication practices consist of AI and data communication.

Balancing the benefits and risks related to AI deployments

Taken together, our results show that implementing ethical AI involves several governance practices. The methods adopted are not merely technical, and various stakeholders are involved.

In agreement with previous studies, we wish to highlight the role of practices such as risk assessment, competence development, and cross-functional collaboration. What’s more, our results underscore and further elucidate practices related to data governance, MLOps, and AI design.

We believe that the large-scale implementation of AI standards, certificates and audits, as well as of explainable AI systems, is yet to happen. Our findings capture a sample of a field that is still in a formative stage. Different industries have different needs for AI, and acceptable risk thresholds vary by application. It will be interesting to see how organizations continue to balance these risks and benefits as new regulations enter into force.

Organizations must start positioning themselves in the emerging ecosystems of responsible AI

The development of the field will be strongly shaped by the AI Act, the European Commission's proposal for regulating artificial intelligence. It is time for organizations to start positioning themselves in a future AI business landscape in which responsible AI is a core component.

We acknowledge that the development, deployment, and use of AI systems often take place in ecosystems that transcend organizational boundaries. Future research could examine the inter-organizational activities related to AI governance.

Putting responsible AI into practice has been the AIGA project's motto from the start. Our research demonstrates that addressing ethical AI principles requires action at many organizational levels. It is a continuous process that touches other areas such as IT governance and data governance. Another direction for future research would be to further explore the intersections of IT governance, data governance and AI governance.

Want to find out more?

Read the full conference paper or watch Akseli Seppälä's ICIS 2021 presentation.

The research described in this blog post was conducted by the AIGA consortium and the Digital Economy and Society Research Group (University of Turku, Finland).